Introduction

After reading several documents and books and studying many so-called object-oriented libraries, I noticed that most of them were in fact dealing with a concept of the neuron that does not closely match the real world. Many people treat each neuron in a network as a single entity that does everything. In the best case I encountered, the link between neurons existed merely to provide a name in the form of a string.

In my view, the neuron must be partitioned correctly into its components, and each component must perform its own duty correctly.

Mathematics has been used to simulate the flow of information in a neural network. But a human being is not mathematics, and neither should the neuron's behaviour be. The mathematical approach is therefore useful as a theoretical tool, not as a way of reproducing the real world.

As I have not studied brain biology, how can I claim that my perception matches reality? I cannot. On the other hand, I believe it is closer to the real world than the current approaches, and I do not claim it is a perfect simulation of the real world. It is only my view!

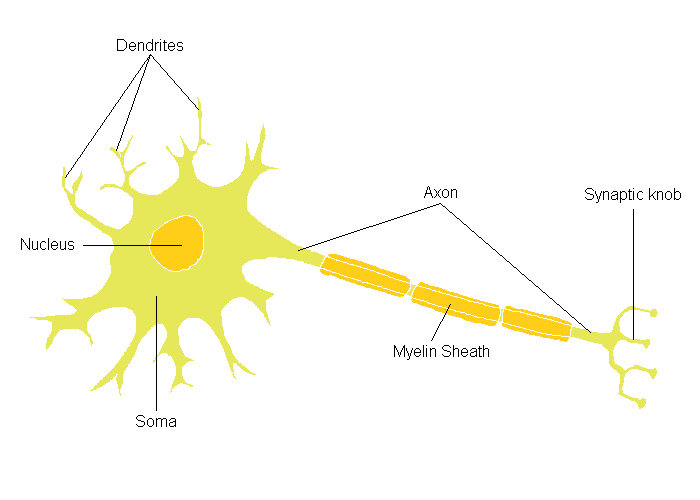

Biological Neuron

The main portion of the cell is called the soma: it contains the nucleus, which in turn contains the genetic material in the form of chromosomes.

Neurons have a large number of extensions called dendrites: they often look like branches or spikes extending out from the cell body. It is primarily the surfaces of the dendrites that receive chemical messages from other neurons.

One extension is different from all the others and is called the axon: the purpose of the axon is to transmit an electro-chemical signal to other neurons, sometimes over a considerable distance. In the neurons that make up the nerves running from the spinal cord to your toes, the axons can be as long as three feet! (Longer axons are usually covered with a myelin sheath, a series of fatty cells that have wrapped around the axon many times. These make the axon look like a necklace of sausage-shaped beads. They serve a function similar to that of the insulation around an electrical wire.)

At the very end of the axon is the axon ending, also called the synaptic knob: it is there that the electro-chemical signal that has travelled the length of the axon is converted into a chemical message that travels to the next neuron.

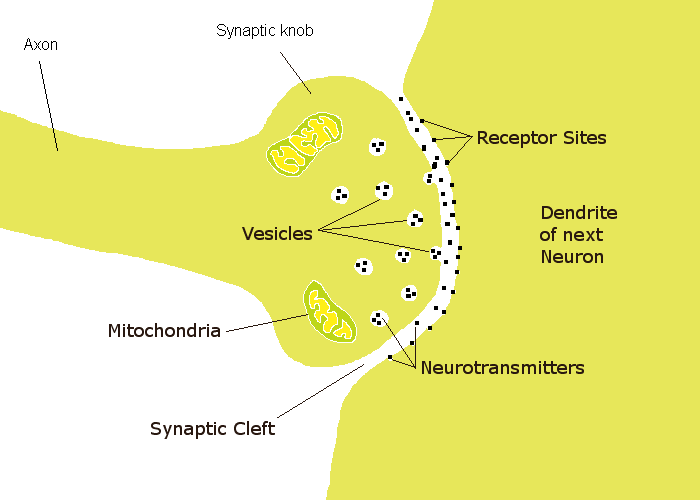

Between the axon ending and the dendrite of the next neuron is a very tiny gap called the synapse, which we will discuss in a little bit.

For every neuron, there are between 1,000 and 10,000 synapses.

When chemicals contact the surface of a neuron, they change the balance of ions (electrically charged atoms) between the inside and the outside of the cell membrane. When this change reaches a threshold level, the effect runs across the cell's membrane to the axon. When it reaches the axon, it initiates the action potential.

When the action potential reaches the axon ending, it causes tiny bubbles of chemicals called vesicles to release their contents into the synaptic gap. These chemicals are called neurotransmitters. They sail across the gap to the next neuron, where they find special places on its cell membrane called receptor sites.

The neurotransmitter acts like a little key, and the receptor site like a little lock. When they meet, they open a passageway for ions, which then change the balance of ions on the outside and the inside of the next neuron. And the whole process starts over again.

While most neurotransmitters are excitatory (that is, they excite the next neuron), there are also inhibitory neurotransmitters. These make it more difficult for the excitatory neurotransmitters to have their effect.

Transfer of information

A neuron receives input from other neurons (typically many thousands). The inputs sum (approximately). Once the input exceeds a critical level, the neuron discharges a spike: an electrical pulse that travels from the cell body, down the axon, to the next neuron(s) (or other receptors). This spiking event is also called depolarization, and it is followed by a refractory period during which the neuron is unable to fire.
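
To make this transfer concrete, here is a minimal integrate-and-fire sketch in Python. It is an illustration under stated assumptions, not a biological model: the class name `SpikingNeuron`, the exact (rather than approximate) summation, the reset of the potential to zero after a spike, and a refractory period counted in discrete time steps are all my own choices.

```python
class SpikingNeuron:
    """Hypothetical integrate-and-fire neuron: sums inputs, spikes past a threshold."""

    def __init__(self, threshold: float, refractory_steps: int) -> None:
        self.threshold = threshold
        self.refractory_steps = refractory_steps
        self.potential = 0.0
        self.refractory_left = 0  # remaining steps during which the neuron cannot fire

    def step(self, inputs: list[float]) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        if self.refractory_left > 0:
            # Refractory period: incoming input is ignored in this sketch.
            self.refractory_left -= 1
            return False
        # The inputs sum (exactly here; only approximately in a real neuron).
        self.potential += sum(inputs)
        if self.potential > self.threshold:
            # Spike (depolarization): reset and enter the refractory period.
            self.potential = 0.0
            self.refractory_left = self.refractory_steps
            return True
        return False


neuron = SpikingNeuron(threshold=1.0, refractory_steps=2)
spikes = [neuron.step([0.4, 0.3]) for _ in range(6)]
print(spikes)  # → [False, True, False, False, False, True]
```

The two silent steps after the first spike are the refractory period: the same input keeps arriving, but the neuron cannot fire again until the period is over.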

Learning

Brains learn. Of course. From what we know of neuronal structures, one way brains learn is by altering the strengths of connections between neurons, and by adding or deleting connections between neurons. Furthermore, they learn "on-line", based on experience, and typically without the benefit of a benevolent teacher.

Informatics Neuron

When we see this representation, we can start to imagine how this may work in terms of information processing (informatics).

For an informatician (a person who has studied the science of information processing), these two images are the main information provided. Some may go further into the biological description, but I do not believe it is required.

An informatician will decompose the neuron as follows:

- The dendrites must be directly connected to the soma: any fluctuation in the dendrites immediately affects the soma.

- Every neuron must be associated with a threshold: it may be seen as a filter that prevents false information from propagating.

- The soma has an output gate called the axon.

- Every time the soma is affected, the process must transfer to the axon's storage the residue of the neuron's threshold (what is left once the threshold is crossed). The action potential then transfers all the information to the axon.

- The soma contains a nucleus object. This nucleus object has the copy() function as its main member.

- The axon is the means by which the neuron propagates information to another neuron (its dendrite).

- Each dendrite is connected to another neuron via the synaptic knob.

- The connection between the dendrite and the synaptic knob is the synapse, which is a bridge for information.

An informatician will associate the following:

- INPUT: dendrite.

- Information container: Axon.

- Flow controller: membrane threshold, neurotransmitters, synapse.

- OUTPUT: synaptic knob.

The flow of information (neurotransmitters) can also be seen as follows:

As you may notice, the neurotransmitters are treated as information and are only differentiated into excitatory and inhibitory transmitters at the axon level. The inhibitory transmitters reduce the amount of information that passes to the next neurons.
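
As a small sketch of that idea, assuming transmitters are plain numbers and that inhibitory transmitters simply subtract from the excitatory ones (the text does not fix a formula), the amount of information passed on could be computed as below. The function name `net_information` is hypothetical.

```python
def net_information(excitatory: list[float], inhibitory: list[float]) -> float:
    """Hypothetical: information passed on after inhibition, never negative."""
    # Inhibitory transmitters reduce the excitatory total.
    net = sum(excitatory) - sum(inhibitory)
    # Assumption: inhibition can block the flow entirely but never reverse it.
    return max(0.0, net)


print(net_information([0.5, 0.25], [0.25]))  # → 0.5
print(net_information([0.25], [0.5]))        # → 0.0
```

Clamping at zero is a design choice: it models inhibition as something that can silence a connection, not as negative information.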

Object Oriented Neuron

We can view the flow of information as a Soma with some functionality, combined with a dendrite/soma pair and an axon/synapse pair, which in my view is more object-oriented than the previous decomposition. Why? Mainly because each object processes one type of information with its own action.

We will consider the Neuron object as a container of information.

As the synapse is mainly a gap between the synaptic knob and the dendrite, we cannot associate its functionality with the Neuron container. Instead, the Synapse() function calls, for each synaptic knob, the connected dendrite to accept the data stored in that knob.
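
A Synapse() function of this kind might look like the following Python sketch. It assumes, hypothetically, that a knob stores a `value` and a reference to one dendrite, that a dendrite exposes an `accept()` method, and that the knob is emptied once it has transmitted; none of these names come from the original design. The function is spelled `synapse` to follow Python naming conventions, and it is deliberately a free function rather than a method of a Neuron container, as argued above.

```python
class Dendrite:
    """Hypothetical receiving end: accumulates whatever it is offered."""

    def __init__(self) -> None:
        self.received = 0.0

    def accept(self, amount: float) -> None:
        self.received += amount


class SynapticKnob:
    """Hypothetical sending end: stores data destined for exactly one dendrite."""

    def __init__(self, dendrite: Dendrite) -> None:
        self.dendrite = dendrite
        self.value = 0.0


def synapse(knobs: list[SynapticKnob]) -> None:
    """Bridge the gap: ask each knob's dendrite to accept the knob's stored data."""
    for knob in knobs:
        knob.dendrite.accept(knob.value)
        knob.value = 0.0  # assumption: the knob is emptied once transmitted


d1, d2 = Dendrite(), Dendrite()
k1, k2 = SynapticKnob(d1), SynapticKnob(d2)
k1.value, k2.value = 0.75, 0.5
synapse([k1, k2])
print(d1.received, d2.received)  # → 0.75 0.5
```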

Exhaustive Object Oriented view

The Dendrite object will

- identify the synaptic knob it is connected with.

- maintain a learning ratio that will filter the amount of input information.

The Soma object has

- a list of dendrites.

- the threshold value.

- an evaluator that may trigger the action potential.

- a link to one Axon.

The Axon object has

- a list of synaptic knobs.

- a function that will compute the total of excitatory transmitters and assign the result to the synaptic knobs.

The Synaptic knob object has

- a link to the dendrite it is associated with.

- the amount of information to transmit.
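
The object list above can be sketched with Python dataclasses. This is my own translation, not the original design: the method names `filter_input`, `distribute` and `evaluate`, the helper `connect`, and the choice to subtract inhibitory from excitatory transmitters are all hypothetical. The knob and dendrite point at each other, so the back-references are excluded from `repr` and equality to avoid walking the cycle.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SynapticKnob:
    # Link to the dendrite it is associated with (excluded from repr/eq: cycle).
    dendrite: Dendrite | None = field(default=None, repr=False, compare=False)
    amount: float = 0.0  # amount of information to transmit


@dataclass
class Dendrite:
    # Identifies the synaptic knob it is connected with.
    knob: SynapticKnob | None = field(default=None, repr=False, compare=False)
    learning_ratio: float = 1.0  # filters the amount of input information

    def filter_input(self, amount: float) -> float:
        return amount * self.learning_ratio


@dataclass
class Axon:
    knobs: list[SynapticKnob] = field(default_factory=list)

    def distribute(self, excitatory: float, inhibitory: float) -> None:
        """Compute the net excitatory total and assign it to every knob."""
        total = max(0.0, excitatory - inhibitory)  # assumption: simple subtraction
        for knob in self.knobs:
            knob.amount = total


@dataclass
class Soma:
    axon: Axon  # link to exactly one axon
    threshold: float
    dendrites: list[Dendrite] = field(default_factory=list)

    def evaluate(self, potential: float) -> bool:
        """Evaluator: True means the action potential is triggered."""
        return potential > self.threshold


def connect(source: Soma, target: Soma, learning_ratio: float) -> Dendrite:
    """Hypothetical helper: wire source's axon to a new dendrite on target."""
    knob = SynapticKnob()
    dendrite = Dendrite(knob=knob, learning_ratio=learning_ratio)
    knob.dendrite = dendrite
    source.axon.knobs.append(knob)
    target.dendrites.append(dendrite)
    return dendrite


a = Soma(axon=Axon(), threshold=0.5)
b = Soma(axon=Axon(), threshold=0.5)
link = connect(a, b, learning_ratio=0.5)
a.axon.distribute(excitatory=1.0, inhibitory=0.25)
print(link.filter_input(link.knob.amount))  # → 0.375
```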

Object Oriented Design

In the diagram, the members shown in blue (dark and light) are those that will be overridden by the inheriting class.

Chronological flow

Comments:

- The Soma will depolarize when the value (amount of information/ions) is greater than a threshold.

- The depolarization travels along the axon to each of the synaptic knobs.

- Each of these knobs will process the excitatory and inhibitory neurotransmitters.

- Each knob will deliver the transmitters to the receptor sites of the dendrite it is linked with.

- Upon reception, the dendrite will filter the amount received by what is commonly called the weight or the strength of the connection.

- The dendrite will propagate the information to the soma.
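
The chronological flow can be traced end to end with plain numbers. In this hypothetical sketch, each outgoing link is a tuple `(excitatory, inhibitory, weight)`, each knob subtracts inhibition from excitation, and the receiving soma simply sums what its dendrites pass on; the names `fire` and `soma_input` are mine.

```python
Link = tuple[float, float, float]  # hypothetical: (excitatory, inhibitory, weight)


def fire(potential: float, threshold: float, links: list[Link]) -> list[float]:
    """Follow one depolarization from the soma to each target dendrite."""
    if potential <= threshold:
        return []  # no depolarization: nothing flows down the axon
    delivered = []
    for excitatory, inhibitory, weight in links:
        # Each knob processes its excitatory and inhibitory transmitters...
        released = max(0.0, excitatory - inhibitory)
        # ...and the dendrite filters what it receives by the connection weight.
        delivered.append(released * weight)
    return delivered


def soma_input(delivered: list[float]) -> float:
    """The dendrites propagate their information to the receiving soma."""
    return sum(delivered)


out = fire(potential=1.2, threshold=1.0, links=[(1.0, 0.25, 0.5), (1.0, 0.0, 0.25)])
print(out, soma_input(out))  # → [0.375, 0.25] 0.625
```

Summing `out` assumes both links end on the same target neuron; with several targets, each target soma would only sum the amounts delivered to its own dendrites.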

The Different View

When understanding the flow of information of a neuron, we notice that the information may be altered in three different ways.

Selection of the participants

The first way is selecting which neurons participate in the flow of information. To do so, we can dynamically modify the threshold of a neuron. Recall that when the amount of ions (information) inside and outside the membrane reaches a threshold, the action potential is triggered. As we are dealing with a threshold value, we can easily raise it to dynamically select which neurons participate in a specific flow of information. A high threshold will not trigger the action potential and will therefore block the flow of information.
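
A tiny sketch of this selection mechanism, assuming each neuron is reduced to a name, a current potential and an adjustable threshold stored in plain dictionaries (a hypothetical representation). A neuron participates only while its potential exceeds its threshold, so raising one threshold removes that neuron from the flow.

```python
def participants(potentials: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Names of the neurons whose potential exceeds their current threshold."""
    return [name for name, p in potentials.items() if p > thresholds[name]]


potentials = {"a": 0.8, "b": 0.6, "c": 0.9}
thresholds = {"a": 0.5, "b": 0.5, "c": 0.5}
print(participants(potentials, thresholds))  # → ['a', 'b', 'c']

# Dynamically raise b's threshold: it no longer takes part in this flow.
thresholds["b"] = 10.0
print(participants(potentials, thresholds))  # → ['a', 'c']
```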

We can also see the threshold of the neuron as a Bayesian trigger: indeed, we can associate the threshold level with the probability of an event happening. If the neuron we are considering is an input neuron for a specific kind of result, increasing its threshold alters the level of influence this neuron may have on the final answer.

Contextual Information Softener

During depolarization, the synaptic knob releases neurotransmitters via the vesicles. Some of them may be inhibitory transmitters, which reduce the amount of excitatory transmitters flowing to the dendrite. The synaptic knob therefore has a means to influence (reduce) the amount of information offered to the dendrite.

We can understand the process of the vesicles as a callback that may reduce the amount of information. For example, when we are crossing the road, we interpret the noise of an approaching car as a potential danger; the same noise has its importance reduced when we are walking on the sidewalk, and it is neglected when we are in our garden. The information is therefore reduced by the context. A callback provided dynamically by the context is a convenient means of controlling the importance of information.
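
The context-provided callback can be sketched as a function that the synaptic knob calls before releasing its transmitters. The helper names (`make_softener`, `release`, `CONTEXTS`) and the softening factors are invented purely to mirror the road example; they are not measured values.

```python
from typing import Callable

Softener = Callable[[float], float]  # takes an amount, returns the reduced amount


def make_softener(factor: float) -> Softener:
    """Build a hypothetical context callback keeping only `factor` of the input."""
    def soften(amount: float) -> float:
        return amount * factor
    return soften


# Illustrative contexts only; the factors are arbitrary.
CONTEXTS: dict[str, Softener] = {
    "crossing the road": make_softener(1.0),  # full importance: potential danger
    "on the sidewalk": make_softener(0.5),    # importance reduced
    "in the garden": make_softener(0.0),      # noise neglected
}


def release(amount: float, context: str) -> float:
    """Synaptic knob release, softened by whatever callback the context supplies."""
    return CONTEXTS[context](amount)


for place in CONTEXTS:
    print(place, release(0.75, place))
```

Because the callback is looked up at release time, swapping the context changes the importance of the same noise without touching the neuron itself.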

Learning stage

All the algorithms we are dealing with concentrate on the learning factor. This factor is an amplifier or softener of the information at the dendrite level. This is done by increasing or reducing the number of receptor sites. The change can also be drastic: the number may be reduced to 0, blocking the communication entirely.

The learning procedure must be taken seriously because it is not dynamic. The learning pattern represents the knowledge; to implement it, we need to minimize the difference between the amount of information that actually passes and the amount that should pass. The means offered is a factor in [0, 1] that adapts the transfer of information. The idea is to run the information through the network repeatedly until we find suitable values that minimize all the errors.
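
For a single dendrite, this can be sketched with a delta-rule style update. The update rule is my choice of technique, not something the text prescribes, and `train_factor`, `rate` and `epochs` are hypothetical names. The factor is nudged by the error (what should pass minus what actually passes) and clamped to [0, 1], so it can fall to 0 and block the connection, but it can never amplify beyond 1.

```python
def train_factor(inputs: list[float], targets: list[float],
                 factor: float = 0.5, rate: float = 0.1, epochs: int = 200) -> float:
    """Adjust one dendrite's factor in [0, 1] so that input * factor nears target."""
    for _ in range(epochs):
        for x, target in zip(inputs, targets):
            passed = x * factor                  # information that actually passes
            error = target - passed              # what should pass minus what passes
            factor += rate * error * x           # delta-rule step (assumed technique)
            factor = min(1.0, max(0.0, factor))  # keep the factor within [0, 1]
    return factor


learned = train_factor(inputs=[1.0, 2.0, 0.5], targets=[0.3, 0.6, 0.15])
print(round(learned, 3))  # → 0.3
```

When the desired transfer would require a factor above 1, the clamp holds it at 1.0; that is the limit of a factor restricted to [0, 1].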

Question: should learning modify the receptor sites with or without any synaptic softener activated? Do we also learn in the background? And how efficient is that learning?
